Insights 7: Open Source Large Models, Vespa

AI in Practice

A Modern Search Platform: Vespa.ai

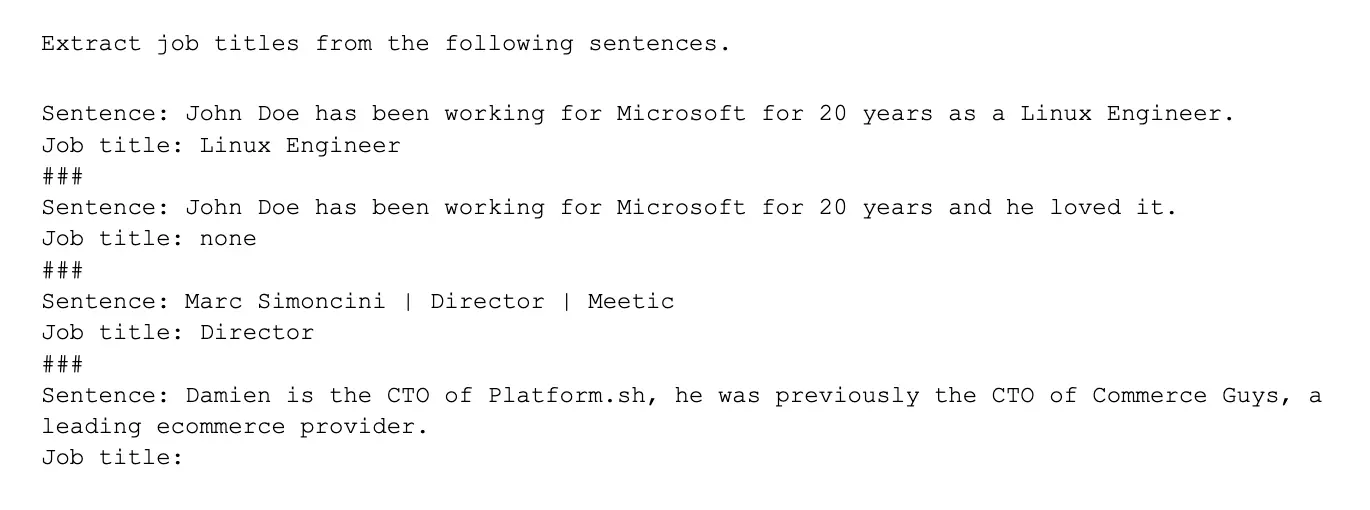

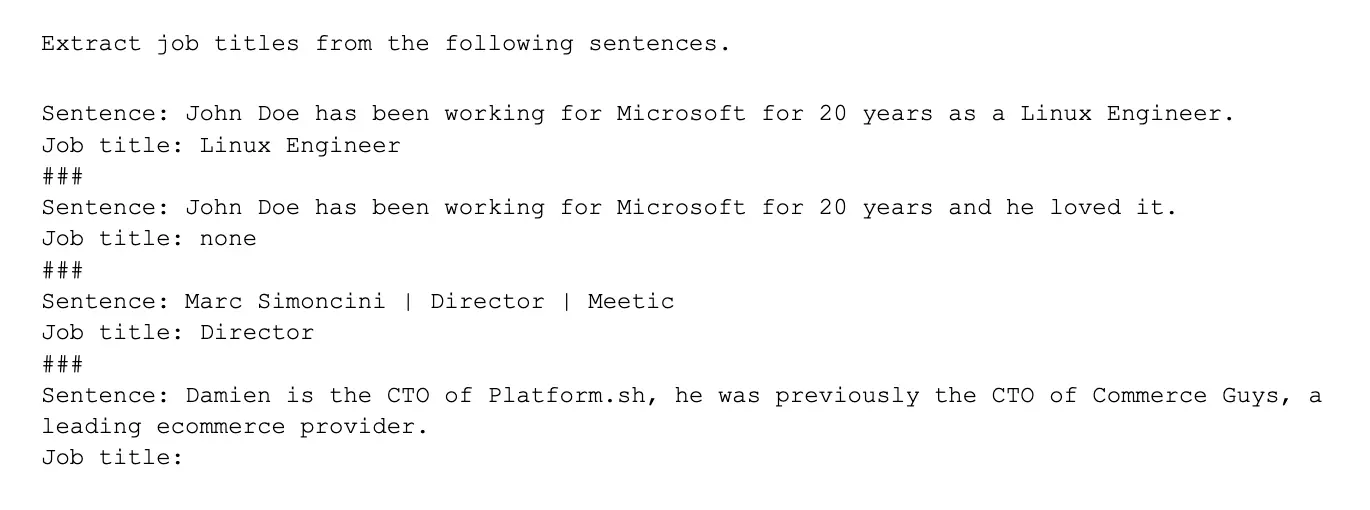

Few-shot NER

Open GPT: EleutherAI, BigScience, and PolyCoder

In addition to EleutherAI, a noteworthy effort is BigScience:

Big Tech AI

Size matters, not just with models, but also with training data.

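A back-of-the-envelope sketch of the compute-optimal trade-off behind DeepMind's Chinchilla result. It relies on two rules of thumb that are our assumptions, not figures from this post. The first is that training compute is C ≈ 6·N·D FLOPs for N parameters and D training tokens. The second is that a compute-optimal model sees roughly 20 tokens per parameter. The function names are hypothetical.

```python
import math

# Rule-of-thumb constants (assumptions, not exact values from any paper):
FLOPS_PER_PARAM_TOKEN = 6  # training compute C ~= 6 * N * D
TOKENS_PER_PARAM = 20      # approximate compute-optimal D / N ratio


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute in FLOPs for a dense transformer."""
    return FLOPS_PER_PARAM_TOKEN * params * tokens


def compute_optimal_split(flops: float) -> tuple[float, float]:
    """Split a compute budget into (params, tokens) with D = TOKENS_PER_PARAM * N.

    Substituting D = k * N into C = 6 * N * D gives N = sqrt(C / (6 * k)).
    """
    params = math.sqrt(flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    return params, TOKENS_PER_PARAM * params


if __name__ == "__main__":
    # Illustrative budget: a 280B-parameter model trained on 300B tokens.
    budget = training_flops(280e9, 300e9)
    n, d = compute_optimal_split(budget)
    print(f"budget: {budget:.2e} FLOPs")
    print(f"compute-optimal: {n / 1e9:.0f}B params, {d / 1e12:.1f}T tokens")
```

Under these assumptions, the same budget that trains a 280B-parameter model on 300B tokens would instead favor a model of roughly 65B parameters trained on about 1.3T tokens. That lands in the same ballpark as Chinchilla's 70B parameters.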
With GPT-3, models got large in a hurry, so there is plenty of room for all sorts of optimization. Google (DeepMind) published a paper showing that the recent crop of large models (GPT-3, AI21’s Jurassic, Microsoft/NVIDIA’s Megatron-Turing, DeepMind’s own Gopher, etc.) is undertrained. By scaling the amount of training data roughly in proportion to model size, the DeepMind team shows substantial performance gains. As a case study, they introduced Chinchilla, a 70B-parameter model that outperforms the 280B-parameter Gopher on the same compute budget, simply because it was trained on 4x more data.

Hyperparameter tuning for large models.

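Microsoft Research's μTransfer builds on μP (Maximal Update Parametrization). If a model is parametrized so that good hyperparameters stay put as width grows, one can tune a narrow proxy model and copy the result to the wide one. The sketch below shows just one ingredient of μP, assuming the Adam optimizer: learning rates for hidden weight matrices shrink in proportion to width, while vector-like parameters keep the tuned rate. μP also changes initialization and the output layer, which this sketch omits. The function and group names are hypothetical.

```python
def mup_adam_learning_rates(base_lr: float, base_width: int, width: int) -> dict[str, float]:
    """Per-group Adam learning rates under a simplified muP-style rule.

    base_lr is assumed to have been tuned on a proxy model of width base_width.
    Initialization and output-layer changes that muP also requires are omitted.
    """
    width_mult = width / base_width
    return {
        # Vector-like parameters (input embeddings, biases, norm gains): LR kept as tuned.
        "vector_like": base_lr,
        # Hidden weight matrices (fan_in grows with width): LR scaled by 1 / width_mult.
        "hidden_matrices": base_lr / width_mult,
    }


# Tune on a width-256 proxy, then reuse the result at width 4096 without re-tuning.
lrs = mup_adam_learning_rates(base_lr=3e-3, base_width=256, width=4096)
print(lrs)
```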
When a neural network is too large to pretrain more than once, tuning its hyperparameters is practically impossible. Enter Microsoft Research, which came up with a cool idea called μTransfer: tune hyperparameters on a small proxy model, then reuse them on the full-size model without further tuning. In a case study, Microsoft shows that μTransfer significantly improves the performance of a 6.7B-parameter GPT-3 model with only 7% compute overhead. The resulting model matches the performance of a 14B-parameter model that did not use μTransfer.

Go deeper.

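DeepNet's stabilizing trick, called DeepNorm, can be sketched as a post-LayerNorm residual connection that up-weights the skip path by a constant α, computing LN(α·x + G(x)) for a sublayer G. It is paired with a constant β that scales down the initialization of some sublayer weights; β is computed but not used in the forward pass below. The constants shown are our reading of the paper for an N-layer decoder-only (or encoder-only) model: α = (2N)^(1/4) and β = (8N)^(-1/4). Treat them as an assumption, since the paper gives different formulas for encoder-decoder models. The toy sublayer is a hypothetical stand-in for attention or a feed-forward block.

```python
import math
from typing import Callable

Vector = list[float]


def deepnorm_constants(num_layers: int) -> tuple[float, float]:
    """(alpha, beta) for an N-layer decoder-only model, per our reading of DeepNet."""
    alpha = (2 * num_layers) ** 0.25
    beta = (8 * num_layers) ** -0.25
    return alpha, beta


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    """LayerNorm without learnable gain/bias, for illustration."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def deepnorm_block(x: Vector, sublayer: Callable[[Vector], Vector], alpha: float) -> Vector:
    """Post-LN residual with the skip path up-weighted: LN(alpha * x + G(x))."""
    gx = sublayer(x)
    return layer_norm([alpha * xi + gi for xi, gi in zip(x, gx)])


def toy_sublayer(v: Vector) -> Vector:
    """Hypothetical stand-in for an attention or feed-forward sublayer."""
    return [0.5 * vi for vi in v]


if __name__ == "__main__":
    alpha, beta = deepnorm_constants(num_layers=32)
    print(f"alpha={alpha:.3f}, beta={beta:.3f}")
    print(deepnorm_block([1.0, 2.0, 3.0, 4.0], toy_sublayer, alpha))
```

As depth N grows, α grows too, so each layer's update G(x) becomes small relative to the skip path. This is the intuition behind why very deep stacks stay trainable.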
The adjective “deep” in deep learning signifies that modern neural networks have many more layers than those of the pre-deep-learning era. How deep we should go remains an interesting question, and training extremely deep transformer networks has been plagued by instability. Microsoft Research published a paper titled “DeepNet: Scaling Transformers to 1,000 Layers”, an order of magnitude deeper than the previous state of the art. The interesting quote is: "Remarkably, on a multilingual benchmark with 7,482 translation directions, our 200-layer model with 3.2B parameters significantly outperforms the 48-layer state-of-the-art model with 12B parameters by 5 BLEU points, which indicates a promising scaling direction."

(Cost-)Efficient serving.

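A minimal sketch of how prompt tuning lets one frozen model serve many tasks. Each task owns only a short matrix of "soft prompt" vectors, which is prepended to the token embeddings before they enter the shared model. The names (`SoftPrompt`, `build_model_input`), the prompt length, and the embedding size are hypothetical. Training the prompt vectors is omitted.

```python
import random
from dataclasses import dataclass

Embedding = list[float]


@dataclass
class SoftPrompt:
    """Task-specific trainable vectors; the only per-task parameters in prompt tuning."""
    vectors: list[Embedding]  # shape: prompt_len x d_model

    @classmethod
    def init(cls, prompt_len: int, d_model: int, seed: int = 0) -> "SoftPrompt":
        rng = random.Random(seed)
        return cls([[rng.uniform(-0.5, 0.5) for _ in range(d_model)]
                    for _ in range(prompt_len)])

    def num_params(self) -> int:
        return sum(len(v) for v in self.vectors)


def build_model_input(prompt: SoftPrompt, token_embeddings: list[Embedding]) -> list[Embedding]:
    """Prepend the task's soft prompt to the token embeddings fed to the frozen model."""
    return prompt.vectors + token_embeddings


if __name__ == "__main__":
    d_model = 8  # toy embedding size
    prompts = {task: SoftPrompt.init(prompt_len=4, d_model=d_model, seed=i)
               for i, task in enumerate(["sentiment", "qa"])}
    tokens = [[0.0] * d_model for _ in range(5)]  # stand-in for embedded input tokens
    for task, prompt in prompts.items():
        model_input = build_model_input(prompt, tokens)
        print(task, len(model_input), "positions,", prompt.num_params(), "task-specific params")
```

Because the shared model's weights never change, a serving system can keep a single copy of the model and attach a small per-task prompt matrix to each request. The alternative is holding one full fine-tuned model per task.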
Fine-tuning pre-trained models is common in NLP, but forking the model for each task can be expensive in production, where many fine-tuned models must be served. To address this problem, Google came up with the idea of prompt tuning, which adds a small set of learnable vectors to the input and, for sufficiently large models, can match fine-tuning quality while sharing the same frozen model across all tasks.

Size matters, again.

Google joined the small group of organizations that have pretrained a model with more than 500B parameters. Their entrant is the Pathways Language Model (PaLM), a 540B-parameter model that (of course) surpasses the large models that came before it on a wide range of benchmarks. It can even explain jokes. It’s so good that we have to reshare here:

Meta AI also shared its latest work on scaling up models, which Meta described as better, fairer computer vision through self-supervised learning on diverse datasets, with 10x more parameters than its predecessor.

Real-world deployment.

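Z-code's internals aren't covered here, but the basic idea of a mixture-of-experts layer can be sketched generically. A learned gate scores every expert for each token, and only the top-k experts actually run. Parameter count therefore grows with the number of experts while per-token compute stays roughly constant. This is a textbook top-k gating sketch with toy scalar "experts", not Microsoft's implementation. The function name and the example gate weights are hypothetical.

```python
import math
from typing import Callable

Expert = Callable[[float], float]


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, gate_weights: list[float], experts: list[Expert], k: int = 2) -> float:
    """Route a (toy, scalar) token to its top-k experts and mix their outputs."""
    # Gate: one score per expert (here a simple linear function of x).
    scores = [w * x for w in gate_weights]
    top_k = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Renormalize gate probabilities over the selected experts only.
    probs = softmax([scores[i] for i in top_k])
    # Only the selected experts are evaluated; the others cost nothing for this token.
    return sum(p * experts[i](x) for p, i in zip(probs, top_k))


if __name__ == "__main__":
    experts = [lambda v: v + 1, lambda v: 2 * v, lambda v: -v, lambda v: v * v]
    gate = [0.5, 1.0, -1.0, 0.2]
    print(moe_forward(3.0, gate, experts, k=2))
```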
Microsoft made advances in machine translation by productionizing a mixture-of-experts architecture called Z-code. The update supports 100 languages, and Microsoft aims to roll out support for about 1,500 low-resource languages in the future.

Ethical issues with large models.

A new paper from Google Research found that large models memorize more of their training data than expected, and that the problem grows more serious as models get larger.

TL;DR for entrepreneurs looking to apply cutting-edge AI

The pace of innovation in AI continues to be rapid. We expect many of these advances to show up as more powerful capabilities that technologists can tap into when building real-world applications. At the AI2 Incubator, we strive to help founders navigate this fast-changing landscape on their journeys to build the next enduring companies.

Additional Readings That We Found Interesting

- Inside the AI2 Incubator: Microsoft co-founder’s unfinished legacy fuels quest for new AI startups

- How Does AI Improve Human Decision-Making? Evidence from the AI-Powered Go Program

- Companies are commercializing multimodal AI models to analyze videos and more

- How Observability Uncovers the Effects of ML Technical Debt

- Farewell, Big Data

- Stanford Institute for Human-Centered Artificial Intelligence 2020–2021 Annual Report

- 12 Graphs That Explain the State of AI in 2022

- Gradient boosted trees are often the best tool for tabular data and now, they’re easy to use in TensorFlow!

- A Wave Of Billion-Dollar Language AI Startups Is Coming
